As AI language models like GPT-3 and GPT-4 become central to businesses and applications, managing their performance and optimizing their outputs is critical. However, troubleshooting and improving applications built on these models can be a challenging and time-consuming process. LangTrace.ai addresses this challenge with an AI-driven platform for debugging, monitoring, and optimizing language-model applications, with the goal of improving accuracy, efficiency, and cost-effectiveness.

LangTrace.ai is designed for developers, data scientists, and businesses that rely on large language models (LLMs) to deliver high-quality outputs. With debugging tools, real-time monitoring, and optimization features, LangTrace.ai helps you understand how your language model workflows behave and where to improve them.

In this article, we’ll explore everything LangTrace.ai has to offer, including its features, pricing, use cases, and how it compares to other tools in the LLM ecosystem.

Key Features of LangTrace.ai

LangTrace.ai offers a robust set of features to help users better understand and optimize their LLM workflows. Here are its key functionalities:

1. Advanced Debugging Tools

- Identify bottlenecks, errors, and inefficiencies in your LLM prompts and outputs.

- Use detailed logging to understand how inputs are processed by the model.

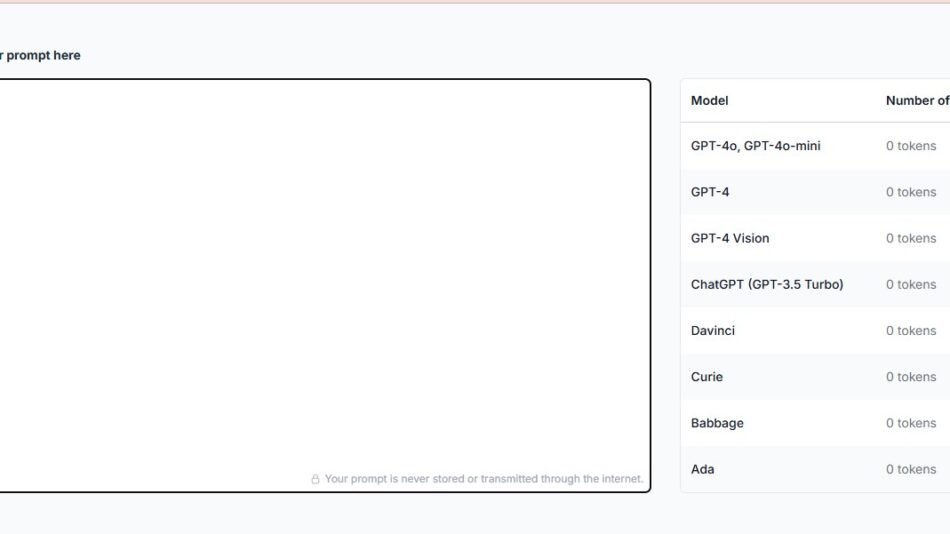

- View a token-by-token breakdown to uncover problematic areas in complex prompts.
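A token-by-token breakdown comes down to counting tokens per part of a prompt and seeing where they concentrate. The Python sketch below illustrates that idea; it is not LangTrace.ai's implementation. The regex-based `approx_tokens` function is a hypothetical stand-in for a real tokenizer, so its counts will not match what a provider actually bills.

```python
import re

# Hypothetical tokenizer approximation: each run of word characters and each
# punctuation mark counts as one token. Real subword tokenizers give different counts.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def approx_tokens(text: str) -> int:
    """Return an approximate token count for a piece of text."""
    return len(TOKEN_PATTERN.findall(text))


def token_breakdown(sections: dict[str, str]) -> list[tuple[str, int, float]]:
    """Return (section, token count, share of total) rows, largest section first."""
    counts = {name: approx_tokens(text) for name, text in sections.items()}
    total = sum(counts.values()) or 1  # avoid dividing by zero for an empty prompt
    rows = [(name, count, count / total) for name, count in counts.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    prompt_sections = {
        "system": "You are a helpful assistant. Answer concisely.",
        "context": "Order #123 shipped on Monday. Order #124 is delayed. " * 5,
        "question": "When will order #124 arrive?",
    }
    for name, count, share in token_breakdown(prompt_sections):
        print(f"{name:<10}{count:>5} tokens {share:>7.1%}")
```

Run directly, it prints one row per section with the repeated `context` block at the top. In practice you would swap `approx_tokens` for the tokenizer that matches your model.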

2. Real-Time Monitoring

- Track how your language model behaves in real time during production.

- Monitor key metrics such as token usage, latency, cost per request, and response accuracy.

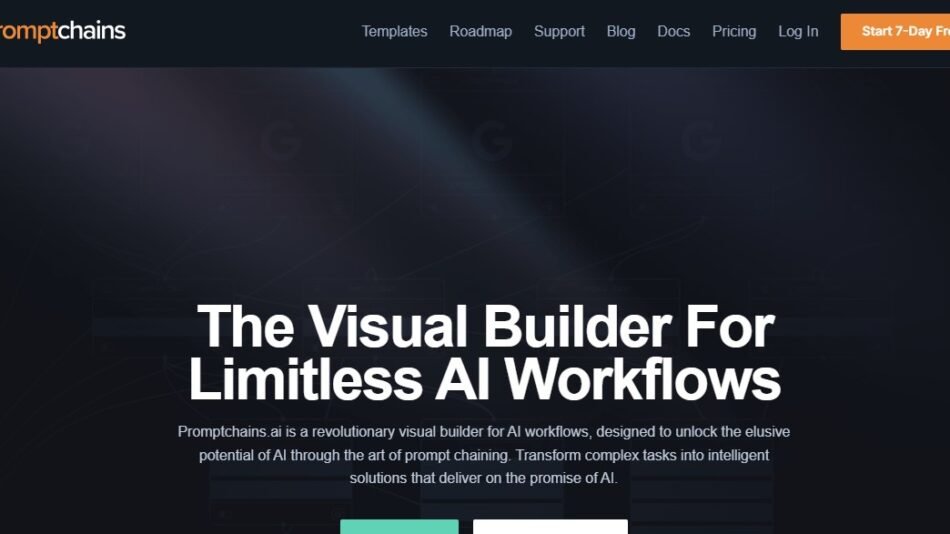
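Per-request metrics such as latency, token usage, and cost per request can be captured by wrapping each model call. The Python sketch below assumes a hypothetical `call` function that returns the response text together with input and output token counts (OpenAI's API, for example, reports token usage with each response), and it uses placeholder per-1K-token prices. It shows the general technique, not LangTrace.ai's SDK.

```python
import time
from dataclasses import dataclass, field
from typing import Callable

# Placeholder prices in USD per 1,000 tokens; substitute your provider's current rates.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


@dataclass
class RequestMetrics:
    latency_s: float
    input_tokens: int
    output_tokens: int
    cost_usd: float


@dataclass
class Monitor:
    records: list[RequestMetrics] = field(default_factory=list)

    def track(self, call: Callable[[str], tuple[str, int, int]], prompt: str) -> str:
        """Run call(prompt), which returns (text, input_tokens, output_tokens),
        and record latency, token usage, and estimated cost for the request."""
        start = time.perf_counter()
        text, input_tokens, output_tokens = call(prompt)
        latency = time.perf_counter() - start
        cost = (input_tokens * PRICE_PER_1K_INPUT
                + output_tokens * PRICE_PER_1K_OUTPUT) / 1000
        self.records.append(RequestMetrics(latency, input_tokens, output_tokens, cost))
        return text


def fake_llm(prompt: str) -> tuple[str, int, int]:
    """Hypothetical stand-in for a real API call: whitespace-separated words count
    as input tokens, and the fixed reply counts as two output tokens."""
    time.sleep(0.01)  # simulate network latency
    return "Hello!", len(prompt.split()), 2
```

For example, `Monitor().track(fake_llm, "Say hello to the user")` records one entry with 5 input tokens and 2 output tokens; with the placeholder prices its estimated cost is $0.0000055.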

3. Prompt Optimization

- Analyze your prompts and receive AI-powered recommendations for reducing token usage and improving response quality.

- Experiment with different phrasings and evaluate the impact on token consumption and output accuracy.

4. Error Tracking and Debugging Insights

- Automatically detect common errors like hallucinations (fabricated or unsupported outputs), excessive token usage, or missing context.

- Use insights to troubleshoot and refine your workflows.

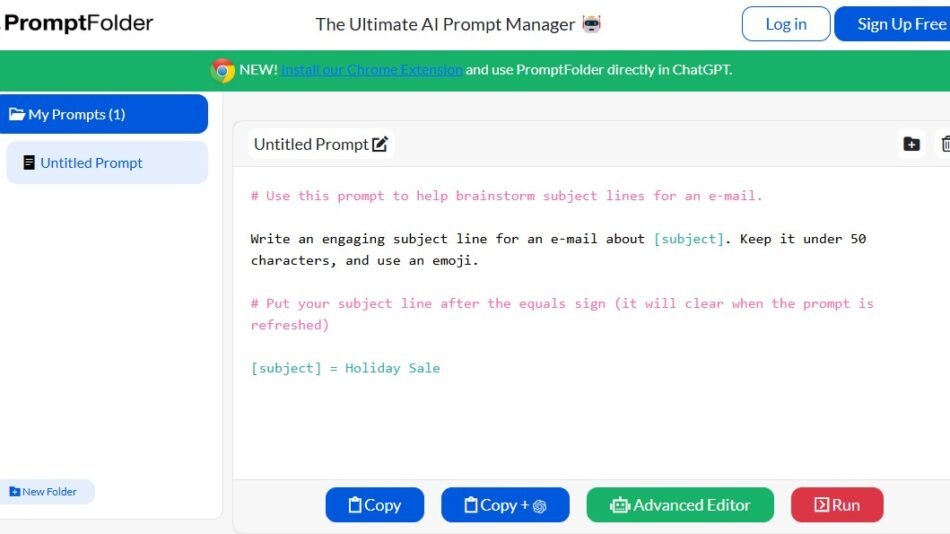
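Automated error detection can start from simple rules. The sketch below flags missing context, token usage above a limit, and a possible hallucination when few of the answer's content words appear in the supplied context. This lexical-overlap check is a deliberately crude heuristic chosen for illustration, not how LangTrace.ai detects hallucinations; the thresholds and the `Trace` record are hypothetical.

```python
import re
from dataclasses import dataclass

WORD = re.compile(r"[a-z0-9]+")


@dataclass
class Trace:
    """Hypothetical record of one request: the question, supplied context, and answer."""
    question: str
    context: str
    answer: str
    total_tokens: int


def content_words(text: str) -> set[str]:
    """Lowercased words longer than three characters, as a crude stop-word filter."""
    return {w for w in WORD.findall(text.lower()) if len(w) > 3}


def detect_issues(trace: Trace, token_limit: int = 2000,
                  min_grounding: float = 0.5) -> list[str]:
    """Flag likely problems in a trace using simple, illustrative heuristics."""
    issues = []
    if not trace.context.strip():
        issues.append("missing_context")
    if trace.total_tokens > token_limit:
        issues.append("token_overuse")
    answer_words = content_words(trace.answer)
    if answer_words and trace.context.strip():
        # Share of the answer's content words that also appear in the context.
        grounded = len(answer_words & content_words(trace.context)) / len(answer_words)
        if grounded < min_grounding:
            issues.append("possible_hallucination")
    return issues
```

An answer that restates facts from its context produces an empty list, while an unrelated answer to a 3,000-token request produces `['token_overuse', 'possible_hallucination']`. Overlap checks miss paraphrased mistakes and fabrications that reuse context vocabulary, so treat this only as a first-pass filter.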

5. Version Control for Prompts

- Track changes to your prompts over time and compare different versions.

- Test and validate the effectiveness of prompt updates using built-in A/B testing tools.
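Prompt version control can be as simple as storing each revision and diffing any two of them. The sketch below uses Python's standard `difflib.unified_diff`; the in-memory `PromptRegistry` class and its methods are hypothetical, and a real system would persist versions and attach metadata such as author and timestamp.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store of prompt versions, keyed by prompt name."""
    versions: dict[str, list[str]] = field(default_factory=dict)

    def commit(self, name: str, text: str) -> int:
        """Save a new version of a prompt and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        history.append(text)
        return len(history)

    def get(self, name: str, version: int) -> str:
        history = self.versions[name]
        if not 1 <= version <= len(history):
            raise IndexError(f"{name} has no version {version}")
        return history[version - 1]

    def diff(self, name: str, old: int, new: int) -> str:
        """Unified diff between two versions of the same prompt."""
        lines = difflib.unified_diff(
            self.get(name, old).splitlines(),
            self.get(name, new).splitlines(),
            fromfile=f"{name}@v{old}",
            tofile=f"{name}@v{new}",
            lineterm="",
        )
        return "\n".join(lines)


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.commit("support", "You are a support agent.\nAnswer briefly.")
    registry.commit("support", "You are a support agent.\nAnswer briefly and cite the order number.")
    print(registry.diff("support", 1, 2))
```

The diff starts with `--- support@v1`, and changed lines are prefixed with `-` (removed) or `+` (added), which makes it easy to review a prompt change before testing it.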

6. Cost Optimization

- Analyze API usage to identify areas where you can reduce costs without sacrificing performance.

- Set budgets and alerts to prevent unexpected overages on paid AI services like OpenAI or Azure OpenAI.
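Budget alerts amount to tracking cumulative spend and firing each threshold once. A minimal Python sketch, assuming hypothetical thresholds at 50%, 80%, and 100% of a monthly budget and leaving out the monthly reset:

```python
from dataclasses import dataclass, field


@dataclass
class BudgetTracker:
    """Hypothetical monthly spend tracker; thresholds are fractions of the budget."""
    monthly_budget_usd: float
    thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)
    spent_usd: float = 0.0
    fired: set[float] = field(default_factory=set)

    def record(self, cost_usd: float) -> list[str]:
        """Add one request's cost and return alerts for thresholds crossed for the first time."""
        self.spent_usd += cost_usd
        alerts = []
        for t in self.thresholds:
            if t not in self.fired and self.spent_usd >= t * self.monthly_budget_usd:
                self.fired.add(t)
                alerts.append(
                    f"Spend ${self.spent_usd:.2f} reached {t:.0%} "
                    f"of the ${self.monthly_budget_usd:.2f} budget"
                )
        return alerts
```

With a $100 budget, recording $40 and then $45 returns two alerts (50% and 80%) on the second call, and nothing further fires until total spend reaches $100.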

7. Integration with Popular LLM APIs

- Seamlessly integrates with OpenAI, Hugging Face, and other LLM providers.

- Offers support for custom language models and pipelines.

8. Team Collaboration

- Collaborate with team members to debug and optimize workflows together.

- Share insights, reports, and updates within your team for faster iteration.

9. Detailed Analytics Dashboards

- Access visual reports on token usage, response times, error rates, and cost efficiency.

- Use customizable dashboards to focus on metrics that matter most to your team.
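Dashboard panels are usually aggregates over per-request records. The sketch below computes request count, median and 95th-percentile latency, error rate, and total tokens and cost using only Python's standard library. The `Record` fields are hypothetical, and the function needs at least two records, since `statistics.quantiles` rejects smaller inputs on Python versions before 3.13.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical per-request record collected by a monitoring layer."""
    latency_ms: float
    tokens: int
    cost_usd: float
    error: bool


def summarize(records: list[Record]) -> dict[str, float]:
    """Aggregate request records into dashboard-style metrics (needs at least two records)."""
    latencies = [r.latency_ms for r in records]
    # n=100 yields 99 cut points: index 49 is the median, index 94 the 95th percentile.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": len(records),
        "p50_latency_ms": cuts[49],
        "p95_latency_ms": cuts[94],
        "error_rate": sum(r.error for r in records) / len(records),
        "total_tokens": sum(r.tokens for r in records),
        "total_cost_usd": sum(r.cost_usd for r in records),
    }
```

For 20 records with latencies from 10 ms to 200 ms in 10 ms steps, it reports a p50 of 105 ms and a p95 of 190.5 ms. Percentiles are shown instead of the mean because a few slow requests can hide behind a healthy-looking average.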

10. Security and Compliance

- Built with enterprise-grade security to protect sensitive data.

- Provides tools to anonymize user data and ensure compliance with GDPR, CCPA, and other privacy standards.
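Anonymization often starts with masking obvious identifiers before prompts or logs are stored. The Python sketch below replaces email addresses and US-style phone numbers with placeholders. The patterns are hypothetical and intentionally simple, and masking alone does not make a system GDPR- or CCPA-compliant.

```python
import re

# Hypothetical, deliberately simple patterns: they miss many real-world formats
# (international numbers, names, street addresses).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match of every pattern with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com or 555-123-4567.")` returns `"Contact [EMAIL] or [PHONE]."`.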

How Does LangTrace.ai Work?

LangTrace.ai simplifies the debugging and optimization of LLM workflows. Here’s a step-by-step breakdown of how it works:

1. Connect Your Model

- Integrate LangTrace.ai with your language model APIs, such as OpenAI or Hugging Face.

- Set up pipelines for monitoring and debugging without interrupting production workflows.
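Monitoring without interrupting production usually means the request path only enqueues trace data while a background worker ships it elsewhere. The sketch below shows that pattern with Python's `queue` and `threading` modules. `BackgroundExporter` and the `send_batch` callback are hypothetical names, and the sketch omits the retries and error handling a real exporter would need.

```python
import queue
import threading
from typing import Callable

_STOP = object()  # sentinel that tells the worker thread to exit


class BackgroundExporter:
    """Hypothetical exporter: request handlers enqueue trace events and return at once,
    while a daemon thread delivers them in batches through `send_batch`."""

    def __init__(self, send_batch: Callable[[list[dict]], None],
                 batch_size: int = 50, max_queue: int = 10_000):
        self._queue: queue.Queue = queue.Queue(maxsize=max_queue)
        self._send_batch = send_batch
        self._batch_size = batch_size
        self.dropped = 0
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def emit(self, event: dict) -> None:
        """Enqueue without blocking; if the queue is full, drop the event and count it."""
        try:
            self._queue.put_nowait(event)
        except queue.Full:
            self.dropped += 1

    def _run(self) -> None:
        batch: list[dict] = []
        while True:
            item = self._queue.get()
            if item is _STOP:
                break
            batch.append(item)
            # Send when the batch is full or nothing else is waiting right now.
            if len(batch) >= self._batch_size or self._queue.empty():
                self._send_batch(batch)
                batch = []
        if batch:
            self._send_batch(batch)

    def close(self) -> None:
        """Flush queued events, then stop the worker and wait for it to finish."""
        self._queue.put(_STOP)
        self._worker.join()
```

Passing a callback such as `lambda batch: sent.extend(batch)` and calling `close()` at shutdown delivers every emitted event in order. Dropping events when the queue is full is a deliberate trade-off: losing some telemetry is preferable to slowing down user requests.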

2. Monitor Activity

- Use real-time dashboards to track metrics like token usage, latency, error rates, and overall model performance.

- Set up alerts for specific events, such as exceeding token limits or encountering errors.
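Event-based alerts can be modeled as named predicates evaluated against each request. In this Python sketch the `Event` fields, rule names, and thresholds (4,000 tokens, 5 seconds) are hypothetical placeholders, not LangTrace.ai settings:

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Event:
    """Hypothetical summary of one model request."""
    request_id: str
    total_tokens: int
    latency_ms: float
    error: Optional[str] = None


@dataclass
class AlertRule:
    name: str
    condition: Callable[[Event], bool]


# Placeholder thresholds; tune them to your model's limits and latency targets.
RULES = (
    AlertRule("token_limit_exceeded", lambda e: e.total_tokens > 4000),
    AlertRule("slow_response", lambda e: e.latency_ms > 5000),
    AlertRule("request_error", lambda e: e.error is not None),
)


def evaluate(event: Event, rules: Sequence[AlertRule] = RULES) -> list[str]:
    """Return one alert message per rule whose condition matches the event."""
    return [f"{rule.name}: {event.request_id}" for rule in rules if rule.condition(event)]
```

For example, `evaluate(Event("r2", 5000, 900.0, "timeout"))` returns `['token_limit_exceeded: r2', 'request_error: r2']`. Keeping rules as data makes it easy to add a new alert without touching the evaluation loop.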

3. Debug and Optimize Prompts

- Upload or paste your prompts into the platform.

- Analyze token breakdowns, identify inefficiencies, and receive AI recommendations to refine the prompts.

4. Test and Iterate

- Use A/B testing to compare the effectiveness of different prompt versions.

- Evaluate which prompts deliver better results in terms of accuracy, cost, and user satisfaction.
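Comparing two prompt versions fairly requires checking whether a difference in results is larger than chance. One common approach, shown here as an assumption rather than LangTrace.ai's documented method, is a two-proportion z-test on a binary success signal such as a user thumbs-up or a passed evaluation check:

```python
import math


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between B's and A's success rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # success rate if A and B were identical
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both variants always succeed or always fail
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

If version A succeeds on 120 of 400 requests and version B on 150 of 400, `two_proportion_z_test(120, 400, 150, 400)` gives z ≈ 2.24 and p ≈ 0.025, suggesting B's higher success rate is unlikely to be chance at the usual 0.05 level. The normal approximation behind this test assumes reasonably large samples.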

5. Generate Reports and Insights

- Generate detailed reports to understand trends, issues, and opportunities for improvement.

- Share insights with your team to align on optimization strategies.

Use Cases for LangTrace.ai

LangTrace.ai serves a wide range of users and industries where LLMs are used. Below are some common use cases:

1. API Cost Optimization

- Analyze and reduce token usage to minimize expenses when using paid APIs like OpenAI.

2. Troubleshooting AI Outputs

- Debug prompts that produce inaccurate or irrelevant outputs.

- Identify and fix cases of AI hallucinations or misinterpreted context.

3. Developing AI Applications

- Optimize LLMs used in chatbots, virtual assistants, or content generation platforms for better user experience.

4. Enhancing Content Generation

- Refine prompts used to generate articles, reports, and other text content, ensuring high-quality and coherent outputs.

5. Improving Customer Support Automation

- Track and optimize chatbot interactions to ensure faster, more accurate responses to customer queries.

6. Enterprise Applications

- Manage and monitor the performance of custom LLM pipelines for enterprise use cases, such as document summarization or sentiment analysis.

Pricing Plans

LangTrace.ai offers a range of pricing plans to cater to businesses and developers of all sizes. Below is an overview of the pricing structure (visit the LangTrace.ai website for the most up-to-date details):

1. Free Plan

- Limited to basic monitoring and debugging features.

- Ideal for small-scale projects or individuals testing the platform.

2. Pro Plan

- Price: Starting at $49/month.

- Includes advanced debugging, prompt optimization, and detailed analytics.

- Suitable for startups and small teams.

3. Business Plan

- Price: Starting at $199/month.

- Offers team collaboration tools, cost optimization features, and API integrations.

- Designed for mid-sized businesses and growing teams.

4. Enterprise Plan

- Custom Pricing: Tailored for large organizations with high-volume API usage and complex workflows.

- Includes dedicated support, advanced security features, and API access for custom integrations.

Strengths of LangTrace.ai

- Comprehensive Debugging Tools: Simplifies the process of identifying issues and optimizing prompts.

- Cost-Effective: Helps businesses reduce token usage and optimize LLM interactions, saving on API expenses.

- User-Friendly Interface: Intuitive design makes it easy for users of all experience levels to debug and optimize their workflows.

- Real-Time Monitoring: Provides immediate insights and alerts, ensuring smoother operations during production.

- Team Collaboration Features: Supports collaboration between team members, enabling faster debugging and iteration.

Drawbacks of LangTrace.ai

- Advanced Features Require Paid Plans: The free version is limited in functionality and may not meet the needs of larger projects.

- Focused on LLM Debugging: While it excels in LLM optimization, it may not cater to broader AI debugging needs outside of language models.

- Dependent on Integrations: Users must have access to APIs like OpenAI, which come with their own costs and limitations.

Comparison with Competitors

LangTrace.ai is often mentioned alongside other tools in the LLM ecosystem, such as PromptLayer, LangChain, and Weaviate, although these tools address different parts of the stack. Here’s how it compares:

- PromptLayer: Focuses on prompt storage and versioning but lacks LangTrace.ai’s advanced debugging and cost optimization features.

- LangChain: Ideal for building custom pipelines, but LangTrace.ai provides better monitoring and prompt-level optimization tools.

- Weaviate: Primarily a vector database tool, whereas LangTrace.ai specializes in debugging and monitoring LLM workflows.

LangTrace.ai is particularly strong for teams and businesses looking for an easy-to-use, all-in-one debugging and optimization platform for language models.

Customer Reviews and Testimonials

Here’s what users have to say about LangTrace.ai:

- John M., AI Developer: “LangTrace.ai saved us thousands in API costs by helping us optimize our prompts. The token analysis tool is a lifesaver!”

- Sophia L., Product Manager: “The real-time monitoring dashboard is fantastic. We’ve caught issues early thanks to the alerts system.”

- David K., Research Scientist: “Debugging language models used to be tedious, but LangTrace.ai has made it so much easier. Highly recommend it to anyone working with GPT models.”

Conclusion

LangTrace.ai is a game-changing platform for anyone working with AI language models. Its advanced debugging tools, real-time monitoring, and cost optimization features make it an invaluable resource for developers, businesses, and researchers aiming to get the best performance out of their LLM workflows.

By offering detailed insights into token usage, prompt effectiveness, and error tracking, LangTrace.ai can save time and help reduce costs for users working with paid APIs.

Ready to streamline your language model workflows? Visit the official LangTrace.ai website to get started and take your AI projects to the next level.