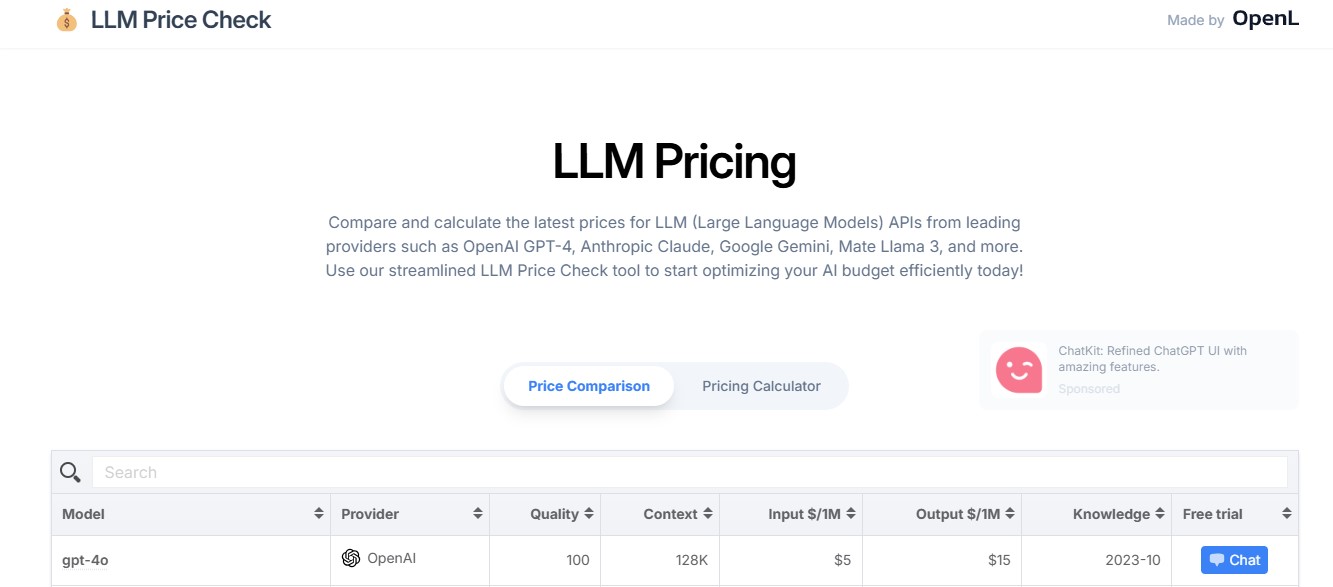

LLM Price Check is a free platform for comparing the costs of large language model (LLM) APIs. With the growing popularity of AI models such as OpenAI’s GPT and Anthropic’s Claude, pricing transparency is critical for businesses and developers. LLM Price Check offers an easy-to-navigate interface to assess costs, features, and suitability across different providers.

In this article, we will explore its features, functionality, pricing structure, use cases, strengths, drawbacks, customer reviews, and comparisons with alternative tools.

Features

- Comprehensive Pricing Comparison: Offers a detailed side-by-side comparison of popular LLM providers like OpenAI, Anthropic, and more.

- Customizable Filters: Users can narrow their search by factors such as model type, token limits, pricing tiers, and usage scenarios.

- Up-to-Date Information: Regular updates ensure the pricing and feature data reflect current market trends and provider offerings.

- Transparent Cost Calculations: Provides token-level or query-level pricing to help estimate actual usage costs accurately.

- Wide Coverage of Models: Includes comparisons for GPT-4, GPT-3.5, Claude 2, and other emerging LLMs.

- User-Friendly Interface: An intuitive design allows both beginners and advanced users to find pricing information effortlessly.
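Token-level and query-level pricing reduce to simple arithmetic. You multiply each token count by its per-token rate and add any flat per-request fee. The Python sketch below assumes prices are stored per million tokens, with separate input and output rates. The `PriceEntry` class, its field names, and the example rates are hypothetical. They do not reflect LLM Price Check's data model or any provider's actual prices.

```python
from dataclasses import dataclass


@dataclass
class PriceEntry:
    """One row of a hypothetical price table; field names are illustrative."""
    model: str
    input_per_million: float   # USD per 1,000,000 input (prompt) tokens
    output_per_million: float  # USD per 1,000,000 output (completion) tokens
    per_request: float = 0.0   # optional flat USD fee per call (query-level pricing)


def request_cost(entry: PriceEntry, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one API call under token- plus query-level pricing."""
    token_cost = (input_tokens * entry.input_per_million
                  + output_tokens * entry.output_per_million) / 1_000_000
    return token_cost + entry.per_request


if __name__ == "__main__":
    # Placeholder rates, not real provider prices.
    demo = PriceEntry("example-model", input_per_million=3.0, output_per_million=15.0)
    # A call with 2,000 prompt tokens and 500 completion tokens.
    print(f"${request_cost(demo, 2_000, 500):.4f}")  # $0.0135
```

Keeping input and output rates separate matters because many providers charge more for output tokens. A single blended rate can therefore misestimate costs for prompt-heavy or generation-heavy workloads.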

How It Works

- Search: Users input the LLM model or API they are interested in comparing.

- Filter: Apply filters like usage limits, type of model (e.g., conversational or generative), or deployment options.

- Compare: View a side-by-side comparison of pricing, feature sets, and other specifications.

- Select and Plan: Identify the most cost-effective API based on usage and operational requirements.
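The search, filter, compare, and select steps can be approximated in a few lines of Python. This is a minimal sketch, not LLM Price Check's implementation. The `ModelListing` fields, the `rank_models` helper, and the catalogue entries are all hypothetical. The cost model assumes simple per-million-token input and output rates.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModelListing:
    """Hypothetical listing; fields mirror the filters described above."""
    provider: str
    model: str
    model_type: str            # e.g. "conversational" or "generative"
    context_tokens: int        # maximum context window, in tokens
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


def workload_cost(m: ModelListing, input_tokens: int, output_tokens: int) -> float:
    """Projected USD cost for a given volume of input and output tokens."""
    return (input_tokens * m.input_per_million
            + output_tokens * m.output_per_million) / 1_000_000


def rank_models(listings: List[ModelListing], model_type: str, min_context: int,
                input_tokens: int, output_tokens: int) -> List[ModelListing]:
    # Filter: keep only models of the requested type with a large enough context window.
    candidates = [m for m in listings
                  if m.model_type == model_type and m.context_tokens >= min_context]
    # Compare: sort the survivors by projected cost, cheapest first.
    return sorted(candidates, key=lambda m: workload_cost(m, input_tokens, output_tokens))


# Placeholder catalogue; names and prices are invented for illustration.
LISTINGS = [
    ModelListing("provider-a", "model-a-large", "conversational", 128_000, 10.0, 30.0),
    ModelListing("provider-a", "model-a-small", "conversational", 16_000, 0.5, 1.5),
    ModelListing("provider-b", "model-b", "conversational", 100_000, 1.0, 5.0),
    ModelListing("provider-c", "model-c", "generative", 200_000, 0.25, 1.0),
]

if __name__ == "__main__":
    # Select and plan: 50M input and 10M output tokens per month, 32K context needed.
    for m in rank_models(LISTINGS, "conversational", 32_000, 50_000_000, 10_000_000):
        cost = workload_cost(m, 50_000_000, 10_000_000)
        print(f"{m.provider}/{m.model}: ${cost:,.2f} per month")
```

With these placeholder numbers, the filter drops two models. model-a-small fails because its context window is only 16K, and model-c fails because it is the wrong type. model-b ranks first at $100.00 per month, versus $800.00 for model-a-large. Returning the full sorted list rather than only the minimum keeps the side-by-side view intact for the final selection.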

Use Cases

- Developers: To budget and select APIs for projects requiring natural language processing.

- Businesses: To find cost-efficient AI services for customer service, content creation, or analytics.

- Researchers: To explore affordable APIs for academic experiments.

- Educators: For teaching students about AI using budget-friendly APIs.

Pricing

LLM Price Check itself is free to use, but the tool focuses on showing the costs of various LLM APIs. Pricing structures displayed may include:

- OpenAI: GPT-4 (8K context) was listed at $0.03 per 1,000 input tokens; GPT-3.5 Turbo costs a small fraction of that.

- Anthropic’s Claude: From $1.63 per million input tokens (Claude Instant).

- Other APIs: Prices vary depending on provider, model, and token limits.

(Prices change frequently; check the website for current data.)
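The two provider figures above use different units: one is per 1,000 tokens and the other is per million tokens. They should be normalized before being compared. The hypothetical helper below converts a quote to USD per million tokens. The unit labels (`per_1k`, `per_1m`) are illustrative assumptions.

```python
# Units that appear in published price lists: per 1,000 tokens and per million tokens.
TOKENS_PER_UNIT = {"per_1k": 1_000, "per_1m": 1_000_000}


def to_per_million(price: float, unit: str) -> float:
    """Convert a USD price quoted in `unit` to USD per one million tokens."""
    return price * 1_000_000 / TOKENS_PER_UNIT[unit]


if __name__ == "__main__":
    openai_quote = to_per_million(0.03, "per_1k")     # $0.03 per 1,000 tokens
    anthropic_quote = to_per_million(1.63, "per_1m")  # $1.63 per million tokens
    print(f"{openai_quote:.2f} vs {anthropic_quote:.2f} USD per 1M tokens")
    print(f"ratio: {openai_quote / anthropic_quote:.1f}x")
```

Taken at face value, $0.03 per 1,000 tokens is $30 per million, roughly 18 times the $1.63 per million figure. The smaller-looking number is therefore the more expensive one.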

Strengths

- Transparency: Regularly updated data and detailed cost breakdowns provide clarity.

- Accessibility: Free and easy to use for individuals and teams.

- Time-Saving: Reduces the need to visit multiple provider websites for comparison.

- Support for Multiple Models: Extensive database covering a range of LLMs.

Drawbacks

- Limited Advanced Analytics: Does not provide deep insights into performance benchmarks or usage patterns.

- Reliance on Provider Data: Accuracy depends on timely updates from LLM providers.

- Focused Scope: Exclusively pricing-focused; lacks implementation guidance or performance tips.

Comparison with Other Tools

- Alternatives: Tools like Hugging Face’s model hub and OpenAI’s pricing pages provide individual provider details but lack direct comparisons.

- LLM Price Check Advantage: Its cross-provider, side-by-side view and user-friendly interface set it apart.

Customer Reviews and Testimonials

- Tech Developer: “LLM Price Check saved me hours of research and helped me choose the right LLM for my startup.”

- Business Analyst: “A must-use tool for finding cost-effective API solutions. Simple and efficient!”

- Researcher: “I appreciated the clear breakdown of token-based pricing, which helped plan my budget effectively.”

Conclusion

LLM Price Check simplifies the process of finding cost-efficient APIs for businesses, developers, and researchers. Its transparent, up-to-date, and user-friendly design helps users make informed decisions quickly. While the platform is limited to pricing comparisons, its focused utility makes it an indispensable tool for those in the AI domain.
